Adobe: From PostScript to Agentic AI – What’s Next?

- Stuti Chopra

- Aug 1, 2025

Updated: Aug 10, 2025

A Legacy Forged in Innovation

Adobe’s journey began in the mid-1980s with the development of PostScript, a page description language that transformed digital printing and ushered in a new era of desktop publishing. PostScript lets printers and other output devices understand exactly how to render text, images, and graphics on a page. Instead of sending a picture of the page to the printer, the computer sends a set of PostScript instructions that describe the page, allowing for precise, high-quality output, especially in professional publishing. It revolutionized desktop publishing because documents could be printed as they appeared on screen, regardless of the printer used. This led to the creation of Apple's first LaserWriter printer (which used PostScript) and popularized tools like Adobe Illustrator and PageMaker. Through acquisitions such as Photoshop (1995), FrameMaker, and Premiere, Adobe built a creative powerhouse, but its most revolutionary invention came in 1993: the Portable Document Format (PDF).
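To make the "instructions, not pictures" idea concrete, here is a minimal sketch in Python that writes out a tiny PostScript program. The function name `postscript_page` and its default coordinates are assumptions made for illustration; the operators it emits (`findfont`, `scalefont`, `setfont`, `moveto`, `show`, `showpage`) are standard PostScript.

```python
def postscript_page(text: str, x: int = 72, y: int = 720, size: int = 24) -> str:
    """Return a one-page PostScript program that draws `text` at (x, y), in points."""
    # Escape the characters that are special inside a PostScript string literal.
    escaped = text.replace("\\", "\\\\").replace("(", "\\(").replace(")", "\\)")
    lines = [
        "%!PS",                                           # marks the file as PostScript
        f"/Helvetica findfont {size} scalefont setfont",  # choose a font and size
        f"{x} {y} moveto",                                # move the "pen" to a position
        f"({escaped}) show",                              # paint the text there
        "showpage",                                       # tell the device to output the page
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(postscript_page("Hello, desktop publishing"))
```

The printer's interpreter executes these commands itself, which is why the same file can be rendered at whatever resolution the device supports.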

Fig. 1: Adobe Acrobat Reader, used to work with PDFs on desktop and in browsers

PDF was originally developed by Adobe as a proprietary format. Over time, as PDF became widely used for document sharing and archiving, Adobe decided to make it more open and accessible. In 2008, PDF was published as an ISO standard (ISO 32000-1), making it an internationally recognized open standard that is no longer controlled solely by Adobe. Before PDF, sharing documents across different operating systems and software was a cumbersome and often frustrating process, with formatting inconsistencies and font issues being commonplace. PDF solved this problem by encapsulating all the elements of a document, such as text, fonts, images, and layout, in a single, platform-independent file. This invention revolutionized document sharing, archiving, and printing, becoming an indispensable format for businesses, governments, and individuals worldwide.
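As a rough illustration of the "single, platform-independent file" idea, the sketch below assembles a one-page PDF by hand in Python. The function name `minimal_pdf` is an assumption made for this example, and the sketch simplifies in two ways: it accepts only Latin-1 text, and it refers to the standard Helvetica font by name rather than embedding font data as real-world PDFs often do.

```python
def minimal_pdf(text: str) -> bytes:
    """Build a one-page PDF that shows `text` in Helvetica, returned as raw bytes."""
    # Escape characters that are special inside a PDF string literal.
    escaped = text.replace("\\", "\\\\").replace("(", "\\(").replace(")", "\\)")
    # Page content: begin text, set font, position, show string, end text.
    content = f"BT /F1 24 Tf 72 720 Td ({escaped}) Tj ET".encode("latin-1")
    objects = [
        b"<< /Type /Catalog /Pages 2 0 R >>",
        b"<< /Type /Pages /Kids [3 0 R] /Count 1 >>",
        b"<< /Type /Page /Parent 2 0 R /MediaBox [0 0 612 792] "
        b"/Contents 4 0 R /Resources << /Font << /F1 5 0 R >> >> >>",
        b"<< /Length %d >>\nstream\n%s\nendstream" % (len(content), content),
        b"<< /Type /Font /Subtype /Type1 /BaseFont /Helvetica >>",
    ]
    out = bytearray(b"%PDF-1.4\n")
    offsets = []
    for number, body in enumerate(objects, start=1):
        offsets.append(len(out))  # byte offset of each object, needed for the xref table
        out += b"%d 0 obj\n%s\nendobj\n" % (number, body)
    xref_start = len(out)
    out += b"xref\n0 %d\n" % (len(objects) + 1)
    out += b"0000000000 65535 f \n"  # mandatory free-list head entry
    for offset in offsets:
        out += b"%010d 00000 n \n" % offset  # each entry is exactly 20 bytes
    out += b"trailer\n<< /Size %d /Root 1 0 R >>\n" % (len(objects) + 1)
    out += b"startxref\n%d\n%%%%EOF\n" % xref_start
    return bytes(out)


if __name__ == "__main__":
    with open("hello.pdf", "wb") as handle:
        handle.write(minimal_pdf("Hello, PDF"))
```

Layout (the page box), text, and the font reference all sit in one file alongside a cross-reference table, so any conforming reader can locate and render them without the original authoring software.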

Adobe Summit 2025

At Adobe Summit 2025, Adobe revealed a lineup of technologies and products that reflect its commitment to AI-driven customer experiences, scalable content creation, and intelligent marketing. Adobe is moving from generative AI to agentic AI: autonomous, goal-driven systems that act, learn, and collaborate across platforms. The company also unveiled AI-powered advancements aimed at Customer Experience Orchestration (CXO). Central to this vision is the Adobe AI Platform, which unites in-house and third-party models within the Adobe ecosystem. The spotlight was on Adobe Experience Platform Agent Orchestrator, a unified system that deploys intelligent agents to boost personalization and operational efficiency across functions like site optimization, content resizing, data cleansing, and audience targeting.

Product Spotlights: What’s New?

The most significant launch was the Agent Orchestrator within the Adobe Experience Platform. It introduces a system of ten purpose-built AI agents, each designed to manage a specific task such as site optimization, content personalization, or audience segmentation. These agents can operate independently or coordinate in real time, enabling continuous, adaptive customer journey management without constant human input.

Fig. 2: Agent Orchestrator within the Adobe Experience Platform.

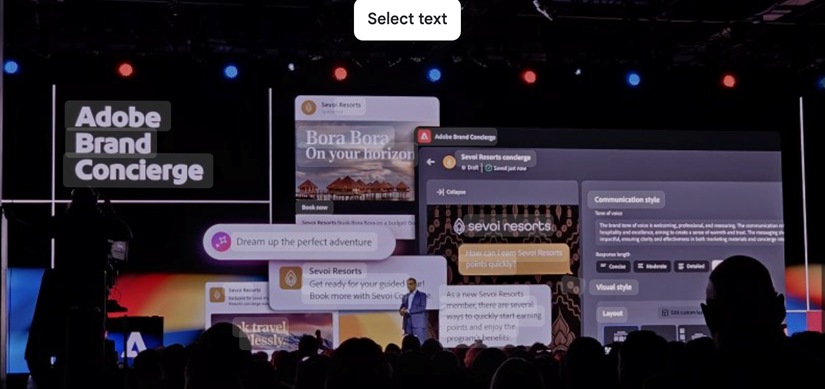
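The idea of agents that work alone or in coordination can be sketched in a few lines of Python. This is a toy illustration only, not Adobe's API or architecture: the `Orchestrator` class, the two agent functions, and the shared-context dictionary are all hypothetical names invented for this example.

```python
from typing import Callable, Dict, List

# Hypothetical model: an "agent" is a function that reads a shared context
# dictionary and returns the keys it wants to add or update.
Agent = Callable[[Dict[str, object]], Dict[str, object]]


def audience_agent(ctx: Dict[str, object]) -> Dict[str, object]:
    """Pick out returning visitors (three or more visits) as a segment."""
    return {"segment": [v for v in ctx["visitors"] if v["visits"] >= 3]}


def content_agent(ctx: Dict[str, object]) -> Dict[str, object]:
    """Write a message per visitor, using the segment if another agent produced one."""
    targets = ctx.get("segment", ctx["visitors"])
    return {"messages": {v["id"]: f"Welcome back, {v['id']}" for v in targets}}


class Orchestrator:
    """Runs registered agents in a planned order, passing results along."""

    def __init__(self) -> None:
        self.agents: Dict[str, Agent] = {}

    def register(self, name: str, agent: Agent) -> None:
        self.agents[name] = agent

    def run(self, plan: List[str], context: Dict[str, object]) -> Dict[str, object]:
        ctx = dict(context)  # copy so the caller's data is left untouched
        for name in plan:
            ctx.update(self.agents[name](ctx))
        return ctx


if __name__ == "__main__":
    orchestrator = Orchestrator()
    orchestrator.register("audience", audience_agent)
    orchestrator.register("content", content_agent)
    visitors = [{"id": "a", "visits": 5}, {"id": "b", "visits": 1}]
    print(orchestrator.run(["audience", "content"], {"visitors": visitors}))
```

Running only `["content"]` messages every visitor, while running `["audience", "content"]` lets the second agent build on the first agent's output, which is the coordination pattern the announcement describes at a much larger scale.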

Further, Adobe launched Brand Concierge, a customer-facing, AI-powered assistant that uses multimodal interaction (voice, text, visuals) to guide users across discovery, purchase, and loyalty stages. It draws on first-party data and Adobe’s content engine to ensure brand-safe, hyper-personalized interactions across channels, aiming to function like an intelligent brand representative.

Fig. 3: Brand Concierge, a fully agentic application that leverages a customer’s first-party data and integrates with the Adobe Digital Experience ecosystem.

Furthermore, Adobe significantly expanded its Firefly suite with new developer APIs, enabling automated creative workflows:

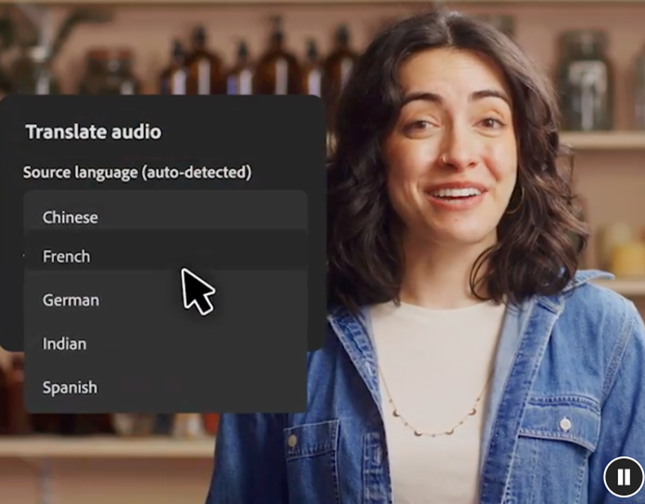

Translate & Lip Sync: Automatically localizes video content with synced voice and subtitles.

Reframe: Adjusts video and image dimensions for multiple formats and platforms.

3D Generation Tools: Allow fast creation of realistic 3D assets.

Custom Models API: Lets brands train Firefly models with their own styles, ensuring brand-consistent outputs.

Fig. 4: Translate video with AI – Adobe Firefly

For content teams, Adobe introduced upgrades to GenStudio Foundation, a tool designed to automate every stage of campaign creation—from planning and asset generation to publishing—while ensuring brand consistency. In Adobe Experience Manager (AEM), new features such as generative metadata tagging and natural language search streamline asset management, while the Sites Optimizer tool uses AI to detect and resolve SEO, accessibility, and performance issues with minimal human effort. Journey Optimizer also received a boost with AI-powered experimentation capabilities, while Customer Journey Analytics now includes agents that analyze and summarize multi-channel engagement patterns. Adobe’s broader strategy was further reinforced by expanded integrations with enterprise platforms like Microsoft 365, SAP, ServiceNow, and AWS, embedding its AI technologies deeply into everyday business operations.

Fig. 5: Adobe expands GenStudio Content Supply Chain Offering for Marketing and Creative Teams to Tackle Skyrocketing Content Demands with AI.

Key Technologies in Adobe’s Latest Patent Filings

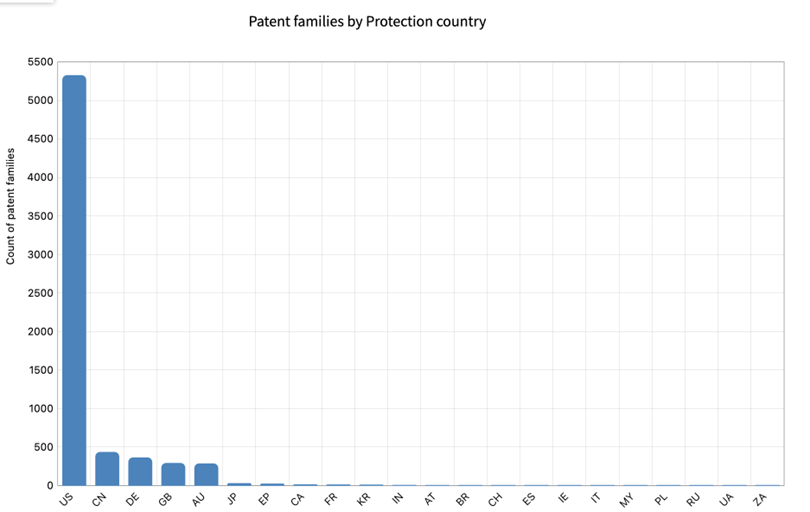

Adobe holds over 5,400 currently active patent families worldwide. The U.S. tops the filing list, with a strong presence in China and Germany. Adobe has filed the highest number of its patents in computer technology, with nearly 5,000 filings. This underscores Adobe’s strategic focus on innovations involving software, artificial intelligence, data processing, and cloud-based platforms, and it aligns with the company’s core business model, which revolves around digital media, creative software, and enterprise solutions that rely heavily on advanced computing.

Fig. 6: Adobe holds over 5,400 currently active patent families worldwide, with a significant presence in the US.

Fig. 7: Adobe has filed the highest number of patents in Computer technology.

Adobe’s Technology Trajectory Based on Patent Filings: 2022–2025

Recent patent filings by Adobe reflect its strategic push into advanced AI-driven technologies. These include innovations such as AI-powered marketing co-pilots capable of generating entire campaigns autonomously, showcasing Adobe’s intent to streamline and automate creative workflows. Additionally, the company has focused on securing core intellectual property around agentic AI orchestration, multimodal content generation, and advanced video processing technologies. This includes developments in video extension, translation workflows, and video-to-video transformation capabilities—many of which align closely with the product announcements and technology roadmaps unveiled during Adobe Summit 2025.

Adobe’s recent patent activity reflects a clear trajectory: moving from assistive AI tools to fully generative, user-steerable systems that redefine creative workflows across its flagship applications. The technologies being developed demonstrate Adobe’s commitment to deeply integrated, real-time AI systems that support personalization, multimodal processing, and context-sensitive editing—enabling a future where creativity is accelerated by intelligent automation.

Among the standout technologies is image relighting using machine learning, which enhances photo and video aesthetics by intelligently adjusting lighting based on scene semantics and spatial composition. This is particularly relevant for content creators looking to simulate professional studio-quality lighting in post-production. Closely aligned with this is context-aware image modification, which allows edits to consider not just selected objects, but their relationship with surrounding elements—enabling more intuitive operations like background-aware object replacement or dynamic scene relighting, especially within Photoshop and Lightroom.

In the vector design space, Adobe is advancing scribble-to-vector generation using diffusion models. This allows rough, freehand sketches to be transformed into polished vector illustrations, significantly reducing the gap between ideation and execution. Such innovation aligns closely with Adobe Illustrator’s mission, allowing users to convert informal input into professional-grade assets. Supporting generative workflows further, presentation generation using knowledge graphs introduces a mechanism for automatically producing branded content such as business slides, tailored to corporate themes and data inputs—ideal for Express and Acrobat integrations.

At the AI model level, Adobe is tackling the challenge of controllability through knowledge editing in text-to-image systems, empowering users to influence or correct how AI models interpret specific concepts or entities. This capability not only enhances creative precision but also supports ethical image generation by enabling bias correction and factual refinement, especially within tools like Adobe Firefly. In parallel, modality-specific learnable adapters are being developed to optimize learning in multimodal systems by routing text, image, and audio data through specialized processing paths, thereby improving output relevance for tasks like captioning, summarization, or prompt-based design generation.

In the realm of generative video and sound, Adobe is exploring customized motion and appearance controls, offering creators the ability to define how characters or scenes move and look in AI-generated animations. This positions Adobe to enhance motion graphics tools such as After Effects or Premiere Pro. Complementing video is multi-class audio separation, aimed at isolating distinct sound sources—such as voices, instruments, or ambient noise—for fine-tuned audio editing in Audition or Premiere.

Together, these innovations point to a comprehensive reimagining of Adobe Creative Cloud. The company is engineering a suite of interoperable, intelligent systems that extend across Photoshop, Illustrator, Premiere, Audition, and Firefly—transforming creativity into a fluid, AI-accelerated experience grounded in user intent, contextual understanding, and multi-format capability.

Here are some of the most telling developments:

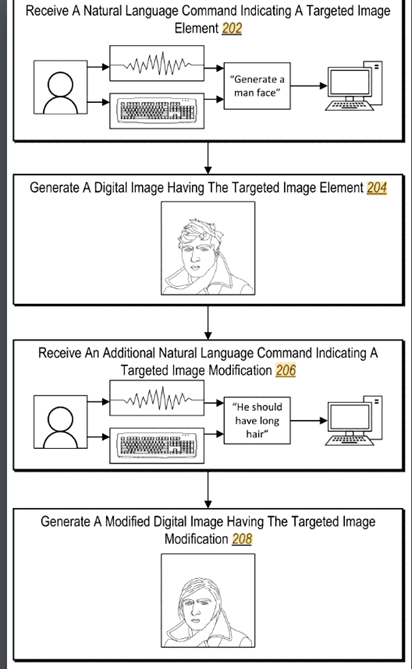

1. Interactive Generative Design Tools

Adobe has filed patents for a system that lets users interactively guide a generative neural network. Instead of just typing a prompt and hoping for the best, the system allows users to manipulate latent variables or provide input mid-process. It’s designed to give creators more control over the output and improve relevance.

Fig. 8: A process flow for generating and modifying digital images

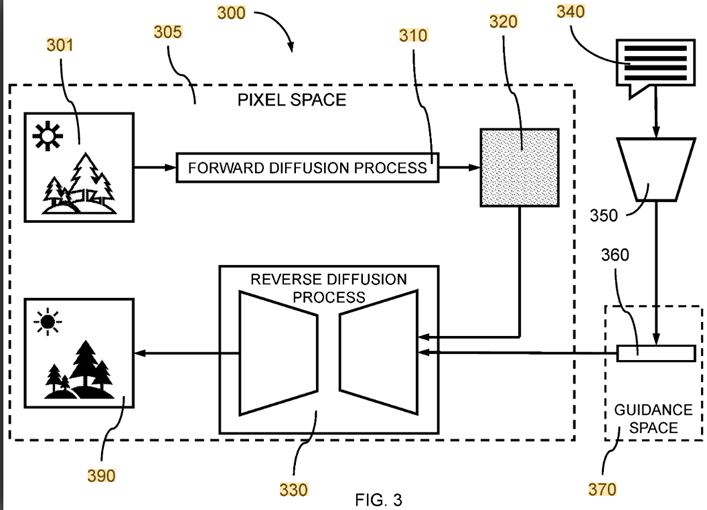

2. Scaling Diffusion Models with Fewer Artifacts

One of Adobe’s key filings improves diffusion models, the type used in image generation, by introducing continuous scaling with normalized layers. This addresses a common issue in diffusion-based generators: quality degradation when scaling to different resolutions.

Fig. 9: A block diagram of an example of a guided diffusion model

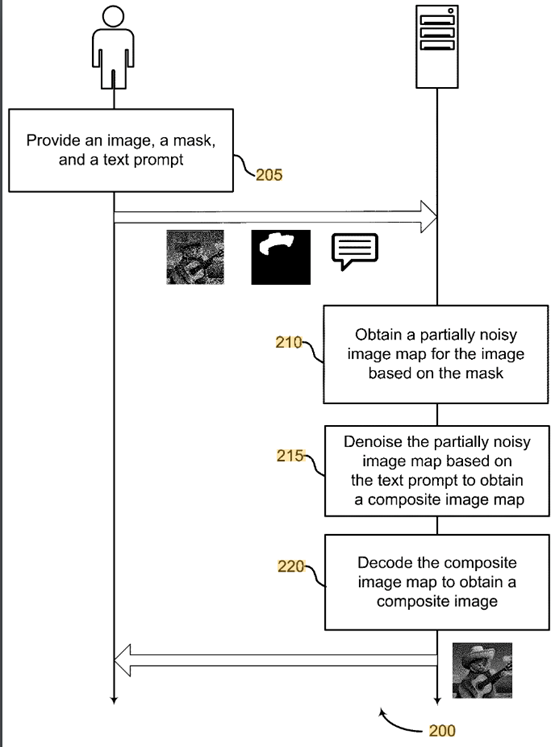

3. Multimodal Editing Using Sketches, Text, and Visual Prompts

Adobe has filed for a multimodal editing system that accepts text, sketch inputs, and example content to define transformation functions for image regions. The invention defines how the different modalities are encoded, fused, and decoded through a single generation pipeline.

Fig. 10: A method for multi-modal image editing

4. AI-Based Auto-Generation of Fillable Forms from Flat Documents

In the document intelligence domain, Adobe has submitted a patent for a system that extracts logical structure and form fields from unstructured documents using a layout-aware neural network. This enables generation of structured, fillable templates without human annotation.

Fig. 11: An illustrative representative fillable document template generation system
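To show what "flat document in, fillable fields out" looks like as data, here is a deliberately simple Python sketch. It swaps the layout-aware neural network described in the filing for a plain regular-expression heuristic that looks for a label followed by a run of underscores, so it illustrates the input and output shapes rather than the patented method. The names `FIELD_PATTERN` and `detect_fields` are hypothetical.

```python
import re
from typing import Dict, List

# Heuristic assumption: a fillable field looks like "Label: ____" (three or more underscores).
FIELD_PATTERN = re.compile(r"(?P<label>[A-Za-z][A-Za-z ]*?)\s*:\s*_{3,}")


def detect_fields(flat_text: str) -> List[Dict[str, object]]:
    """Return one field description per "Label: ____" occurrence in the text."""
    fields: List[Dict[str, object]] = []
    for line_number, line in enumerate(flat_text.splitlines(), start=1):
        for match in FIELD_PATTERN.finditer(line):
            label = match.group("label").strip()
            fields.append(
                {
                    "name": label.lower().replace(" ", "_"),  # machine-friendly key
                    "label": label,                           # text shown to the user
                    "line": line_number,                      # where the blank was found
                    "type": "text",                           # this sketch only knows text fields
                }
            )
    return fields


if __name__ == "__main__":
    sample = "Application Form\nFull Name: ________\nDate of Birth: ____   City: ______\n"
    for field in detect_fields(sample):
        print(field)
```

A learned model would go further than this heuristic, for example by recognising checkboxes, tables, and fields that have no underscores at all, which is the gap the filing aims to close without human annotation.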

5. Semantic Object Removal in Digital Images

A patent application proposes a method for detecting and semantically removing distracting or undesired objects in digital images using generative inpainting models. The system identifies object boundaries and re-renders the background content based on learned scene priors.

Fig. 12: An overview diagram of the scene-based image editing system editing a digital image as a real scene.
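The "remove the object, then re-render the background" step can be illustrated with a much simpler stand-in for a generative inpainting model. The Python sketch below fills masked pixels from the outside in with the average of their already-known neighbours. This is classic neighbour averaging, not the learned approach in the filing, and the function name `fill_masked` and the list-of-lists image format are assumptions for illustration.

```python
from typing import List

Grid = List[List[float]]


def fill_masked(image: Grid, mask: List[List[bool]]) -> Grid:
    """Fill pixels where mask is True, using the mean of known 4-neighbours."""
    height, width = len(image), len(image[0])
    result = [row[:] for row in image]  # work on a copy; the input stays unchanged
    unknown = {(r, c) for r in range(height) for c in range(width) if mask[r][c]}
    while unknown:
        updates = {}
        for r, c in unknown:
            neighbours = [
                result[nr][nc]
                for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1))
                if 0 <= nr < height and 0 <= nc < width and (nr, nc) not in unknown
            ]
            if neighbours:
                updates[(r, c)] = sum(neighbours) / len(neighbours)
        if not updates:
            # No masked pixel touches a known pixel, so there is nothing to sample from.
            raise ValueError("mask covers the whole image")
        for (r, c), value in updates.items():
            result[r][c] = value
        unknown.difference_update(updates)  # these pixels now count as known
    return result


if __name__ == "__main__":
    picture = [[5.0, 5.0, 5.0], [5.0, 99.0, 5.0], [5.0, 5.0, 5.0]]
    hole = [[False, False, False], [False, True, False], [False, False, False]]
    print(fill_masked(picture, hole))
```

Averaging works for flat backgrounds but blurs textures and structure, which is why a model with learned scene priors is needed to produce plausible fills for real photographs.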

Conclusion: Engineering the Future of Creativity

Adobe’s recent innovations point to a bold reimagining of digital creativity. Beneath the surface of its tools, a powerful framework is taking shape. Intelligent systems such as controllable diffusion models, multimodal editing interfaces, adaptive visual pipelines, and cloud-based creative environments are beginning to redefine what is possible.

This is not just a new set of features. It is a shift in how people create. In this emerging future, artificial intelligence does not replace the artist. It collaborates with them. Creation becomes more intuitive, more responsive, and more aligned with the creator’s intent. Whether through generating images from language, refining video through suggestion, or building brand-consistent assets in moments, Adobe is enabling a more fluid and expressive creative process.

These changes are not far off. They are already appearing in the next version of Firefly, in smarter tools within Photoshop and Premiere, and in the evolution of Creative Cloud into a connected, intelligent platform.

References:

Source 1: https://get.adobe.com/reader/
